4/10/2014

We've moved!

Hey, the ELEKS Labs blog has moved to new hosting. You can find it here: www.elekslabs.com

4/08/2014

Google Glass in Warehouse Automation

Imagine that you operate a huge warehouse, where you store all the awesome goods you sell.

Well, one of our customers does.

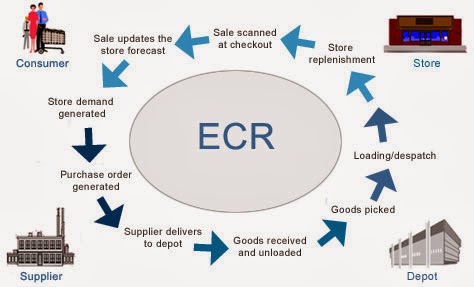

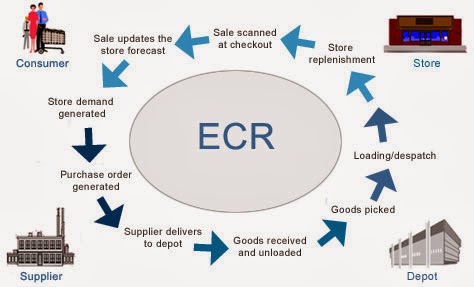



For instance, if you run a supermarket, your process consists of at least these major modules:

- Online order management & checkout.

- Order packaging.

- Incoming goods processing and warehouse logistics.

- Order delivery.

Source: http://www.igd.com/our-expertise/Supply-chain/In-store/3459/On-Shelf-Availability

If you relax for a minute, you could probably brainstorm several ideas of how Google Glass can be applied in a warehouse.

But let's focus on one link in that picture: "Goods received and unloaded". If you break it down into pieces, it seems to be a rather simple process:

- Truck comes to your warehouse.

- You unload the truck.

- Add all items to your warehouse management system.

- Place each item in its designated location inside the warehouse.

- pick a box with both of your hands

- carry it to the shelf

- go back to the truck & repeat

We combined both approaches, and here is what we created:

Pretty cool, yeah? Here you can see the automation of all the basic tasks we mentioned above. It is incredible how useful Google Glass is for warehouse automation.

In the next section we're going to dive deep into technical details, so if you're a software engineer, you can find some interesting pieces of code below.

Working with Camera in Google Glass

We wanted to let a person grab a package and scan its barcode at the same time. First of all, we tried implementing barcode scanning routines for Glass. It's great that Glass is actually an Android device. So, as usual, add permissions to AndroidManifest.xml, initialize your camera and have fun.

```xml
<uses-feature android:name="android.hardware.camera" />
<uses-feature android:name="android.hardware.camera.autofocus" />
<uses-permission android:name="android.permission.CAMERA" />
<uses-permission android:name="android.permission.RECORD_AUDIO" />
```

Glass is a beta product, so you need some hacks. When you implement the surfaceChanged(...) method, don't forget to add a parameters.setPreviewFpsRange(30000, 30000); call. Eventually, your surfaceChanged(...) should look like this:

```java
public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
    ...
    Camera.Parameters parameters = mCamera.getParameters();
    Camera.Size size = getBestPreviewSize(width, height, parameters);
    parameters.setPreviewSize(size.width, size.height);
    // Glass-specific hack: pin the preview rate to 30 fps
    // (the range is given in frames per second scaled by 1000).
    parameters.setPreviewFpsRange(30000, 30000);
    mCamera.setParameters(parameters);
    mCamera.startPreview();
    ...
}
```
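The getBestPreviewSize(...) helper is not shown in this post. Here is a minimal sketch of one way to write it, assuming "best" means the largest supported size that still fits the surface. To keep it runnable without the Android SDK, PreviewSize is a hypothetical stand-in for Camera.Size, and the method takes a plain list of sizes instead of Camera.Parameters. The real helper in our project may choose differently.

```java
import java.util.List;

/** Hypothetical stand-in for Camera.Size so the sketch runs without Android. */
final class PreviewSize {
    final int width;
    final int height;

    PreviewSize(int width, int height) {
        this.width = width;
        this.height = height;
    }
}

final class PreviewSizePicker {
    /**
     * Returns the supported size with the most pixels that still fits inside
     * the surface, or null if no supported size fits.
     */
    static PreviewSize getBestPreviewSize(int surfaceWidth, int surfaceHeight,
                                          List<PreviewSize> supported) {
        PreviewSize best = null;
        for (PreviewSize candidate : supported) {
            boolean fits = candidate.width <= surfaceWidth
                    && candidate.height <= surfaceHeight;
            if (!fits) {
                continue;
            }
            long candidateArea = (long) candidate.width * candidate.height;
            if (best == null || candidateArea > (long) best.width * best.height) {
                best = candidate;
            }
        }
        return best;
    }
}
```

On a device you would feed it the list returned by parameters.getSupportedPreviewSizes() and read width and height from the Camera.Size entries.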

P.S. Unfortunately, Glass currently has just one focus mode, "infinity". I hope things will get better in the future.

Working with Barcodes in Google Glass

Once you see a clear picture inside your prism, let's proceed with barcode scanning. There are some barcode scanning libraries out there: zxing, zbar, etc.

We grabbed a copy of the zbar library and integrated it into our project.

- Download a copy of it.

- Copy the armeabi-v7a folder and the zbar.jar file into the libs folder of your project.

- Use it with camera:

```java
static {
    // Load the native iconv library that ships with the zbar Android build.
    System.loadLibrary("iconv");
}
```

In onCreate(...) of your activity:

```java
setContentView(R.layout.activity_camera);
// ...
scanner = new ImageScanner();
scanner.setConfig(0, Config.X_DENSITY, 3);
scanner.setConfig(0, Config.Y_DENSITY, 3);
```

Create a Camera.PreviewCallback instance like this. You'll scan the image and receive the scanning results in it.

```java
Camera.PreviewCallback previewCallback = new Camera.PreviewCallback() {
    public void onPreviewFrame(byte[] data, Camera camera) {
        Camera.Size size = camera.getParameters().getPreviewSize();

        // Wrap the raw NV21 preview frame and convert it to Y800 (grayscale).
        Image barcode = new Image(size.width, size.height, "NV21");
        barcode.setData(data);
        barcode = barcode.convert("Y800");

        // scanImage returns the number of decoded symbols (0 if none, negative on error).
        int result = scanner.scanImage(barcode);
        if (result > 0) {
            SymbolSet syms = scanner.getResults();
            for (Symbol sym : syms) {
                doSmthWithScannedSymbol(sym);
            }
        }
    }
};
```
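One practical wrinkle: onPreviewFrame(...) is called for every preview frame, so a barcode that stays in view will probably reach doSmthWithScannedSymbol(...) many times in a row. Below is a minimal sketch of a debouncer that drops such repeats. ScanDebouncer and its methods are hypothetical names for illustration, not part of zbar or of our sample project.

```java
/** Suppresses repeated reports of the same barcode within a time window. */
final class ScanDebouncer {
    private final long windowMillis;
    private String lastCode;
    private long lastSeenMillis;

    ScanDebouncer(long windowMillis) {
        this.windowMillis = windowMillis;
    }

    /**
     * Returns true if the code should be handled, false if it repeats the
     * previously seen code and that code was seen less than windowMillis ago.
     * Every sighting refreshes the timestamp, so a barcode that stays in
     * view keeps being suppressed until it disappears for a full window.
     */
    boolean shouldHandle(String code, long nowMillis) {
        boolean repeat = code.equals(lastCode)
                && nowMillis - lastSeenMillis < windowMillis;
        lastCode = code;
        lastSeenMillis = nowMillis;
        return !repeat;
    }
}
```

Inside the preview callback you could guard the handler with something like debouncer.shouldHandle(sym.getData(), SystemClock.elapsedRealtime()). The window length is a tuning knob you'd have to pick for your own workflow.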

barcode.convert("Y800") call and scanner would still work.

Just keep in mind that Android camera returns images in NV21 format by default.

zbar's ImageScanner supports only Y800 format.
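To make the two formats a bit more concrete: an NV21 frame starts with width * height bytes of luma (brightness), followed by the interleaved, subsampled chroma bytes, while Y800 is just the 8-bit grayscale plane. The sketch below pulls the grayscale plane out of an NV21 buffer in plain Java. It only illustrates the layout and is not how zbar's convert(...) is implemented; the Nv21 class name is made up for this example.

```java
import java.util.Arrays;

final class Nv21 {
    /**
     * Extracts the grayscale (luma) plane from an NV21 frame.
     * NV21 stores width * height luma bytes first; the chroma bytes that
     * follow are simply left out of the result.
     */
    static byte[] toY800(byte[] nv21, int width, int height) {
        int lumaSize = width * height;
        if (nv21.length < lumaSize) {
            throw new IllegalArgumentException("frame is smaller than width * height");
        }
        return Arrays.copyOfRange(nv21, 0, lumaSize);
    }
}
```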

That's it.

Now you can scan barcodes with your Glass :)

Handling Voice input in Google Glass

Apart from the camera, Glass has some microphones, which let you control it via voice. Voice control looks natural here, although people around you may find it disturbing. Especially when it can't recognize "ok glass, google what does the fox say" 5 times in a row.

As you may remember, we want to avoid manual input of specific data from packages. Some groceries have an expiry date. Let's implement recognition of the expiry date via voice. This way, a person can take a package with both hands, scan a barcode, say the expiry date while handling the package, and move on to the next package.

From a technical standpoint, we need to solve two issues:

- perform speech to text recognition

- perform date extraction via free-form text analysis

```java
// Launch the built-in speech recognizer and prompt for the expiry date.
Intent intent = new Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH);
intent.putExtra(RecognizerIntent.EXTRA_PROMPT, "Say expiry date:");
startActivityForResult(intent, EXPIARY_DATE_REQUEST);
```

Then override onActivityResult(...) in your Activity, of course:

```java
@Override
protected void onActivityResult(int requestCode, int resultCode, Intent data) {
    if (resultCode == Activity.RESULT_OK && requestCode == EXPIARY_DATE_REQUEST) {
        doSmthWithVoiceResult(data);
    } else {
        super.onActivityResult(requestCode, resultCode, data);
    }
}

public void doSmthWithVoiceResult(Intent intent) {
    List<String> results = intent.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS);
    Log.d(TAG, "" + results);
    // The extra may be missing, so guard against null as well as an empty list.
    if (results != null && !results.isEmpty()) {
        String spokenText = results.get(0);
        doSmthWithDateString(spokenText);
    }
}
```

Spoken dates can take many forms, for example:

- in 2 days

- next Thursday

- 25th of May

To handle these, we used natty. It does exactly this: it is a natural language date parser written in Java. You can even try it online here.

Here's how you can use it in your project. Add natty.jar to your project. If you use maven, then add it via:

```xml
<dependency>
    <groupId>com.joestelmach</groupId>
    <artifactId>natty</artifactId>
    <version>0.8</version>
</dependency>
```

If you copy JARs into the libs folder instead, you'll need natty with its dependencies. Download all of them:

- stringtemplate-3.2.jar

- antlr-2.7.7.jar

- antlr-runtime-3.2.jar

- natty-0.8.jar

Then use the Parser class in your source:

```java
import com.joestelmach.natty.DateGroup;
import com.joestelmach.natty.Parser;

// doSmthWithDateString("in 2 days");
public static Date doSmthWithDateString(String text) {
    Parser parser = new Parser();
    List<DateGroup> groups = parser.parse(text);
    for (DateGroup group : groups) {
        List<Date> dates = group.getDates();
        Log.d(TAG, "PARSED: " + dates);
        // Return the first date found in the spoken text.
        if (dates.size() > 0) {
            return dates.get(0);
        }
    }
    return null;
}
```
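The recognizer can return several alternative transcriptions, and the code above only looks at the first one. A possible refinement, sketched below, is to try each alternative until one of them parses. SpokenDates and firstParsed are hypothetical names, and the parser is passed in as a function so the sketch runs without natty or Android. It uses java.util.function.Function, so on a toolchain without Java 8 you'd swap in a small interface of your own.

```java
import java.util.List;
import java.util.function.Function;

final class SpokenDates {
    /**
     * Tries each recognizer hypothesis in order and returns the first
     * non-null parse result, or null if none of them parses.
     */
    static <T> T firstParsed(List<String> hypotheses, Function<String, T> parser) {
        if (hypotheses == null) {
            return null;
        }
        for (String text : hypotheses) {
            T parsed = parser.apply(text);
            if (parsed != null) {
                return parsed;
            }
        }
        return null;
    }
}
```

In the Glass app the parser argument would be the doSmthWithDateString(...) method from above, which already returns null when nothing could be parsed.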

natty does a pretty good job of transforming your spoken words into Date instances.

Instead of Summary

Glass is an awesome device, but it has some issues now. Even though it's still in beta, you'll get tons of joy while developing apps for it! So grab a device, download the SDK and have fun!

P.S. You can find full source code for this example here: https://github.com/eleks/glass-warehouse-automation.
