4/10/2014

We've moved!

Hey, the ELEKS Labs blog has moved to new hosting. You can find it here: www.elekslabs.com

4/08/2014

Google Glass in Warehouse Automation

Imagine that you operate a huge warehouse, where you store all the awesome goods you sell.

Well, one of our customers does.

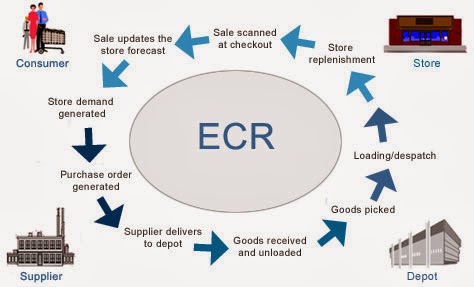

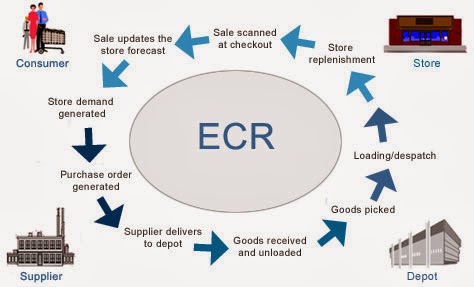


For instance, if you run a supermarket, your process consists of at least these major modules:

- Online order management & checkout.

- Order packaging.

- Incoming goods processing and warehouse logistics.

- Order delivery.

Source: http://www.igd.com/our-expertise/Supply-chain/In-store/3459/On-Shelf-Availability

If you relax for a minute, you could probably brainstorm several ideas for how Google Glass could be applied in a warehouse.

But let's focus on one link in that picture: "Goods received and unloaded". If you break it down into pieces, it seems to be a rather simple process:

- Truck comes to your warehouse.

- You unload the truck.

- Add all items into your Warehouse Management system.

- Place all items into specific place inside the warehouse.

- pick a box with both of your hands

- handle it to the shelf

- go back to the truck & repeat

We combined both of the approaches. And that's what we created:

Pretty cool, yeah? Here you can see automation of all basic tasks we mentioned above. It is incredible how useful Google Glass is for warehouse automation.

In the next section we're going to dive deep into technical details, so if you're a software engineer you can find some interesting pieces of code below.

Working with Camera in Google Glass

We wanted to let a person grab a package and scan its barcode at the same time. First of all, we tried implementing barcode scanning routines for Glass. It's great that Glass is actually an Android device.

So, as usual, add permissions to AndroidManifest.xml, initialize your camera and have fun.

    <uses-feature android:name="android.hardware.camera" />
    <uses-feature android:name="android.hardware.camera.autofocus" />
    <uses-permission android:name="android.permission.CAMERA" />
    <uses-permission android:name="android.permission.RECORD_AUDIO" />

Glass is a beta product, so you need some hacks. When you implement the surfaceChanged(...) method, don't forget to add a parameters.setPreviewFpsRange(30000, 30000); call. Eventually, your surfaceChanged(...) should look like this:

    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        ...
        Camera.Parameters parameters = mCamera.getParameters();
        Camera.Size size = getBestPreviewSize(width, height, parameters);
        parameters.setPreviewSize(size.width, size.height);
        parameters.setPreviewFpsRange(30000, 30000);
        mCamera.setParameters(parameters);
        mCamera.startPreview();
        ...
    }

P.S. Unfortunately, Glass has now just 1 focus mode -- "infinity". I hope, things will get better in the future.
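The getBestPreviewSize(...) helper called in surfaceChanged(...) is not shown in this post. A minimal sketch of what such a helper might do, assuming the goal is simply "the largest supported size that still fits the surface": the Size class here is a plain stand-in for android.hardware.Camera.Size so the code runs off-device, and the list parameter is an assumption. On Glass you would pass parameters.getSupportedPreviewSizes() instead.

```java
import java.util.Arrays;
import java.util.List;

public class PreviewSizePicker {

    /** Plain stand-in for android.hardware.Camera.Size (assumption, for off-device use). */
    public static final class Size {
        public final int width;
        public final int height;

        public Size(int width, int height) {
            this.width = width;
            this.height = height;
        }
    }

    /**
     * Returns the supported size with the largest area that fits inside
     * width x height, or null when no supported size fits.
     */
    public static Size getBestPreviewSize(int width, int height, List<Size> supported) {
        Size best = null;
        for (Size candidate : supported) {
            if (candidate.width > width || candidate.height > height) {
                continue; // too big for the surface
            }
            long area = (long) candidate.width * candidate.height;
            if (best == null || area > (long) best.width * best.height) {
                best = candidate;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<Size> supported = Arrays.asList(
                new Size(1920, 1080), new Size(640, 360), new Size(320, 240));
        Size best = getBestPreviewSize(640, 360, supported);
        System.out.println(best.width + "x" + best.height); // prints 640x360
    }
}
```

Since the helper can return null, real code should fall back to the camera's current preview size in that case.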

Working with Barcodes in Google Glass

Once you see a clear picture inside your prism, let's proceed with barcode scanning. There are some barcode scanning libraries out there: zxing, zbar, etc.

We grabbed a copy of the zbar library and integrated it into our project.

- Download a copy of it.

- Copy the armeabi-v7a folder and the zbar.jar file into the libs folder of your project.

- Use it with camera:

Load the native iconv library in your activity:

    static {
        System.loadLibrary("iconv");
    }

In onCreate(...) of your activity:

    setContentView(R.layout.activity_camera);
    // ...
    scanner = new ImageScanner();
    scanner.setConfig(0, Config.X_DENSITY, 3);
    scanner.setConfig(0, Config.Y_DENSITY, 3);

Create a Camera.PreviewCallback instance like this. You'll scan the image and receive scanning results in it.

    Camera.PreviewCallback previewCallback = new Camera.PreviewCallback() {
        public void onPreviewFrame(byte[] data, Camera camera) {
            Camera.Size size = camera.getParameters().getPreviewSize();

            // The preview frame arrives as NV21; convert it to Y800 for the scanner.
            Image barcode = new Image(size.width, size.height, "NV21");
            barcode.setData(data);
            barcode = barcode.convert("Y800");

            int result = scanner.scanImage(barcode);
            if (result != 0) {
                SymbolSet syms = scanner.getResults();
                for (Symbol sym : syms) {
                    doSmthWithScannedSymbol(sym);
                }
            }
        }
    };

barcode.convert("Y800") call and scanner would still work.

Just keep in mind that Android camera returns images in NV21 format by default.

zbar's ImageScanner supports only Y800 format.
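The two formats are closely related: an NV21 frame starts with a full-resolution luminance (Y) plane of width × height bytes, followed by the interleaved chroma samples, and Y800 is plain 8-bit grayscale. The sketch below illustrates that relationship in plain Java. extractLuma is a hypothetical helper written for this illustration, not part of zbar, and the frame in main is made-up data.

```java
import java.util.Arrays;

public class Nv21Luma {

    /**
     * Returns the grayscale (Y) plane of an NV21 frame: its first
     * width * height bytes. The chroma bytes that follow are dropped.
     */
    public static byte[] extractLuma(byte[] nv21, int width, int height) {
        int lumaLength = width * height;
        if (nv21.length < lumaLength) {
            throw new IllegalArgumentException("Frame is smaller than width * height");
        }
        return Arrays.copyOf(nv21, lumaLength);
    }

    public static void main(String[] args) {
        // Made-up 4x2 frame: 8 luma bytes, then 4 chroma bytes (one V/U pair per 2x2 block).
        byte[] frame = {10, 20, 30, 40, 50, 60, 70, 80, 1, 2, 3, 4};
        byte[] luma = extractLuma(frame, 4, 2);
        System.out.println(Arrays.toString(luma)); // prints [10, 20, 30, 40, 50, 60, 70, 80]
    }
}
```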

That's it.

Now you can scan barcodes with your Glass :)

Handling Voice input in Google Glass

Apart from Camera, Glass has some microphones, which let you control it via voice. Voice control looks natural here, although people around you would find it disturbing. Especially, when it can't recognize "ok glass, google what does the fox say" 5 times in a row.

As you can remember, we want to avoid manual input of specific data from packages. Some groceries have expiry date. Let's implement recognition of expiry date via voice. In this way, a person would take a package with both hands, scan a barcode, say expiry date while handling a package and get back to another package.

From technical standpoint, we need to solve 2 issues:

- perform speech to text recognition

- perform date extraction via free-form text analysis

Speech to text is handled by the standard RecognizerIntent:

    Intent intent = new Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH);
    intent.putExtra(RecognizerIntent.EXTRA_PROMPT, "Say expiry date:");
    startActivityForResult(intent, EXPIARY_DATE_REQUEST);

Then handle onActivityResult(...) in your Activity, of course:

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        if (resultCode == Activity.RESULT_OK && requestCode == EXPIARY_DATE_REQUEST) {
            doSmthWithVoiceResult(data);
        } else {
            super.onActivityResult(requestCode, resultCode, data);
        }
    }

    public void doSmthWithVoiceResult(Intent intent) {
        // Recognition candidates are ordered from most to least likely.
        List<String> results = intent.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS);
        Log.d(TAG, "" + results);
        if (results != null && results.size() > 0) {
            String spokenText = results.get(0);
            doSmthWithDateString(spokenText);
        }
    }

- in 2 days

- next Thursday

- 25th of May

natty does exactly this: it is a natural language date parser written in Java.

You can even try it online here.

Here's how you can use it in your project. Add natty.jar to your project.

If you use maven, then add it via:

    <dependency>
        <groupId>com.joestelmach</groupId>
        <artifactId>natty</artifactId>
        <version>0.8</version>
    </dependency>

For the libs folder you'll need natty with its dependencies. Download all of them:

- stringtemplate-3.2.jar

- antlr-2.7.7.jar

- antlr-runtime-3.2.jar

- natty-0.8.jar

Use the Parser class in your source:

    // doSmthWithDateString("in 2 days");
    public static Date doSmthWithDateString(String text) {
        Parser parser = new Parser();
        List<DateGroup> groups = parser.parse(text);
        for (DateGroup group : groups) {
            List<Date> dates = group.getDates();
            Log.d(TAG, "PARSED: " + dates);
            if (dates.size() > 0) {
                // Return the first date found in the text.
                return dates.get(0);
            }
        }
        // No date could be extracted.
        return null;
    }

natty does a pretty good job of transforming your voice into Date instances.

Instead of Summary

Glass is an awesome device, but it has some issues now. Even though it's still in beta, you'll get tons of joy while developing apps for it! So grab a device, download the SDK and have fun!

P.S. You can find full source code for this example here: https://github.com/eleks/glass-warehouse-automation.

3/06/2014

FakeItEasy: be careful when wrapping an existing object

Isolation frameworks are very popular today; they are an important part of your automated tests. They let you easily isolate dependencies you don't control, such as file systems or network connections, using fake but controllable objects generated on the fly via Reflection. On my last project I used FakeItEasy, a nice framework with a clean API. I like it very much (RIP, Moq)). This post was inspired by a real-life situation.

Fake for an already created object

Normally you create fakes for interfaces or abstract objects. But suppose you want to partially fake a concrete object that has virtual methods:

    Dog dog = A.Fake<Dog>();

Where the class Dog is implemented like this:

    public class Dog
    {
        public virtual string Bark()
        {
            return "Bark!";
        }

        public virtual string BarkBark()
        {
            return Bark() + Bark();
        }
    }

    [Test]
    public void Bark_DespiteExistingImplementation_ReturnsEmptyString()
    {
        Dog dog = A.Fake<Dog>();

        string result = dog.Bark();

        Assert.AreEqual("", result);
    }

Hmm... As you can see, the method Bark() already has logic that returns the string "Bark!", but it is thrown away for the fake object, as if it were based on a completely abstract IDog interface. The same behavior applies to the NSubstitute framework. Moq returns null instead of an empty string.

If you want the logic implemented in virtual methods to be preserved, you have to create a fake that wraps an existing object:

    Dog dog = A.Fake<Dog>(x => x.Wrapping(realDog));

Let's write a test to confirm:

    [Test]
    public void Bark_ForWrappedObject_ReturnsImplementationFromThatObject()
    {
        Dog realDog = new Dog();
        Dog dog = A.Fake<Dog>(x => x.Wrapping(realDog));

        string result = dog.Bark();

        Assert.AreEqual("Bark!", result);
    }

Great. Now, suppose you want to override the method Bark() on the faked object to return the string "Quack!". Simple to do:

    A.CallTo(() => dog.Bark()).Returns("Quack!");

And now the question: what do you expect to be returned from the method BarkBark()? It calls the virtual method Bark() that you overrode with A.CallTo() construct. But...

    [Test]
    public void BarkBark_ForOverriddenBark_UsesOverridenImplementation()
    {
        Dog realDog = new Dog();
        Dog dog = A.Fake<Dog>(x => x.Wrapping(realDog));
        A.CallTo(() => dog.Bark()).Returns("Quack!");

        string result = dog.BarkBark();

        // Not what you expected
        Assert.AreEqual("Quack!Quack!", result); // Oops, "Bark!Bark!"
    }

For those who know how virtual methods work this looks very counter-intuitive. So be aware of this behavior!

But if you do need to override the method Bark() in the traditional "virtual manner", what should you do? Unfortunately, FakeItEasy cannot help you in this situation. You have to manually code the FakeDog class and override the method Bark():

    public class FakeDog : Dog
    {
        public override string Bark()
        {
            return "Quack!";
        }
    }

    [Test]
    public void BarkBark_ForManualFake_UsesOverridenImplementation()
    {
        Dog dog = new FakeDog();

        string result = dog.BarkBark();

        Assert.AreEqual("Quack!Quack!", result);
    }

By the way, with the NSubstitute framework you'll experience the very same issue. Surprisingly, Moq has a simple solution to the problem: just set the CallBase property to true.

Conclusion

Try to avoid situations when your tests use the same object as SUT (System Under Test) and a fake. Like in our example: we tested the class Dog which was our SUT and we also faked a part of it (method Bark()). As you can see, this can lead to surprising results when used in combination with some of the popular isolation frameworks.

2/21/2014

Why Google Glass will fail and why this won’t stop smartglasses’ success

Smartglasses are probably the most promising kind of wearable devices currently on the market. And Google Glass is the most interesting device among them. Yes, Google Glass is sexy and cool. It has great design; it is small and futuristic. After all, it feels like a typical Google product - simple and great. Nevertheless, I think it won't be successful as a product and will eventually fail.

Disclaimer: the following is my own opinion and does not express the official position of ELEKS or its R&D team. In fact, some of the team members argue with me a lot that Glass is the best device ever, that it will conquer the world, and other things like that. Of course, I'm exaggerating here a bit, but it still looks very likely to me that Glass will eventually fail as a commercial product.

So, what's the problem with Google Glass? Well, there are two aspects of it:

1. The device itself. I'll dwell upon its drawbacks in the next section.

2. Its positioning and marketing. I'll show you some interesting historic analogies below and try to project them on to the future.


Five Reasons Why Google Glass Sucks

So, what's wrong with the device? When you watch the ads or listen to Google employees who evangelize Glass, it looks so futuristic and cool that you're starting to believe the future is here and search for the Order button. But things change when you actually get it. We bought one for our R&D team a few months ago and... well, I wouldn't say it is a big disappointment but the device is very far from being market-ready. Here is the list of 5 things that we found very annoying about Google Glass:

2/10/2014

Data Science for Targeted Advertising: How to display relevant ads by leveraging past user behavior.

Online advertising industry is bigger than you thought

The past decade has seen huge growth in online advertising. It is a huge industry, with brands expected to pour roughly 30 billion dollars into it in 2014. Online advertising provides companies with instant feedback, and publishers have more knowledge about their users. Advertisers are very interested in precisely targeted ads: they want to spend the smallest amount of money and get the maximum increase in profit. Targeted advertising addresses this. The problem involves determining where, when and to whom to display a particular advertisement on the Internet. Advertising systems deliver ads based on demographic, contextual or behavioral attributes. One example is sponsored search. It is the most profitable business model on the web and accounts for a huge share of income for the top search engines Google, Yahoo and Bing, generating at least 25 billion dollars per year.

There are a couple of usable methods for targeted advertising:

- Demographic Targeting – this approach defines the targeted audience by gender, age, income, location, etc. It is an old and efficient approach, because it is easy to project behavior onto product categories. Demographic targeting is popular since it's easy to understand and implement. It gives the advertiser transparency and control over the audience selected for targeting.

- Property Targeting – a simple and popular targeting mechanism. The advertiser specifies a set of pages where the ad should be shown. For example, a company that sells trucks could show its advertisement on a website about vehicles.

- Behavioral Targeting – serves ads to users by leveraging their past behavior (searches, site visits, purchases). The most valuable resource for behavioral targeting is the network traffic of a particular user. The more such data you have, the better the targeting results you will achieve. Thus, even local ISP companies can provide more accurate ads for consumers than Google or Yahoo.

Real-time bidding exchanges – de facto standard for targeted advertising

The online advertising industry has grown significantly during the past few years, with extensive usage of real-time bidding (RTB) exchanges. These auction sites allow advertisers to bid in real time on the opportunity to place online display ads. Advertisers integrate with the exchange via an API and collect a variety of data to decide whether or not to bid and at what price. This has created a simple and efficient method for companies to target advertisements to particular users. By industry convention, showing a display ad to a consumer is called an "impression". The auction is triggered the instant a user navigates to a web page and takes place while the page is loading in the user's browser. During the auction, information about the location of the potential advertisement, along with user information, is passed to bidders in the form of a bid request. This data is often supplemented with information the advertiser has previously collected about the user. When an auction starts, a potential advertiser decides whether it wants to bid on this impression, at what price, and which advertisement to show if it wins. There are billions of such real-time transactions each day, and advertisers require large-scale solutions to handle such auctions in milliseconds.

Such a complicated ecosystem is a perfect opportunity for applying machine learning techniques, which play a key role in ad bidding optimization: they increase targeting accuracy and help reach the ultimate goal from the marketer's perspective, "Address the right browsers with the right message at the right moment and preferably at the right price".

Improving ads relevance by applying Machine Learning techniques

The main task of the machine learning system is to identify prospective customers: online users with a higher propensity to purchase a specific product in the near future after being shown the advertisement. The ultimate goal is to build a system that learns predictive models for each ad targeting task automatically. One of the challenges of building such systems is that different ad campaigns can have different performance measures. However, each of these criteria can be approximately represented as some ranking of potential purchasers in terms of purchase propensity. A primary source of input features for behavioral targeting is the user's browsing history, recorded as a set of web pages visited in the past. The target labels can be individual for each campaign and based on actual purchases of the specific product. At a high level, this looks like a straightforward predictive modeling problem. But on closer inspection, it turns out to be impossible to obtain the necessary amount of training data directly for this problem. First, the probability of a purchase in the 7 days after seeing the ad is very low, ranging from 0.0000001 to 0.001 depending on the advertising campaign. Second, the input feature vector includes more than one million features even in the simplest case (consider a user's browsing history encoded as a set of hashed URLs). These dataset attributes make training difficult; however, there are efficient approaches designed to predict consumer purchase propensity in such difficult circumstances.

Site visits as better purchase predictors than click through rate (CTR)

We know that a purchase after seeing an advertisement is a rare event. This means models must be trained on a highly imbalanced class distribution (skewed classes). The simplest and most widely used approach is to train models on a proxy. Currently, the most common proxy is clicks on the advertisement. The efficiency of campaigns is often evaluated based on "click through rate" (CTR), and as a result campaigns are optimized towards increased CTR. In this approach clicks on an advertisement are treated as positive samples: instead of conversions, the model is trained using clicks, but the test set is still labeled by conversions. In a recent study [1], this approach was tested against 10 different ad campaigns. The results imply that optimizing targeting for clicks does not necessarily maximize conversions.

Figure 1. Improvement in prediction accuracy by using conversions for training instead of clicks. Testing is done using conversions in both cases.

Are there other good proxy candidates for evaluating and optimizing advertising campaigns? Recent research [2] answers this question. In contrast to clicks, site visits turned out generally to be good proxies for purchases. Specifically, site visits do remarkably well as the basis for building models to target browsers who will purchase subsequent to being shown the ad. In some cases the models trained on site visits even produce better results than those trained on conversions.

Figure 2. AUC performance distribution with respect to purchase prediction of the models trained on clicks, site visits and purchases respectively.

The results show that site visitors are more likely to be purchasers than ad clickers are.

Dimensionality reduction techniques improve model accuracy.

As mentioned earlier, another difficulty in predicting purchases is the huge input feature space, which typically requires dimensionality reduction. In most cases an ad targeting system tracks over 100 million unique URLs, and any of them could be used in a predictive model. It's very expensive to build and store such high-dimensional models. A number of dimensionality reduction techniques are available nowadays, but not all of them are well suited to the ad targeting problem.

The simplest method for massive binary feature space reduction is feature hashing. It transforms a bag of words into a bag of hashed IDs. Given a set of tokens and a hash function h(), we apply the hash function to each of the tokens and the new feature space is simply the set of hashed values. We can generate a column index for a given token with a hash function. The output of the hash function should be big enough to avoid collision with even a million unique tokens. The pseudocode is as follows:

    function hash_vectorizer(features : string array, N : integer):
        x := new vector[N]
        for f in features:
            h := hash(f)
            x[h mod N] += 1
        return x

Dimensionality reduction results from hash collisions. For example, if a URLs set contains {intel.com, nytimes.com, nyu.edu}, and we have h(“intel.com”) = 6, h(“nyu.edu”) = 6 and h(“nytimes.com”) = 8 then, in the new space, the hashed URLs set has values for features 6 and 8. Hash functions are typically 32-bit or 64-bit, and to project into an arbitrary k-dimensional feature space, we compute h() mod k.
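The hashing step can be sketched in Java as follows. This is a minimal illustration under stated assumptions rather than a production vectorizer: the class name FeatureHasher and the pluggable hash parameter are inventions for this example, and the toy hash values in main are the ones from the URL example above, with k = 10 chosen arbitrarily.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.ToIntFunction;

public class FeatureHasher {

    /**
     * Projects a bag of tokens into an n-dimensional count vector:
     * each token increments the bucket at index hash(token) mod n.
     */
    public static int[] hashVectorize(List<String> features, int n, ToIntFunction<String> hash) {
        int[] x = new int[n];
        for (String feature : features) {
            // floorMod keeps the index non-negative even when the hash is negative.
            x[Math.floorMod(hash.applyAsInt(feature), n)] += 1;
        }
        return x;
    }

    public static void main(String[] args) {
        // Toy hash taken from the example in the text, not a real hash function.
        Map<String, Integer> toyHash = new HashMap<>();
        toyHash.put("intel.com", 6);
        toyHash.put("nyu.edu", 6);
        toyHash.put("nytimes.com", 8);

        int[] x = hashVectorize(
                Arrays.asList("intel.com", "nytimes.com", "nyu.edu"), 10, toyHash::get);
        System.out.println(Arrays.toString(x)); // prints [0, 0, 0, 0, 0, 0, 2, 0, 1, 0]
    }
}
```

Passing String::hashCode as the hash gives a quick general-purpose variant; its values can be negative, which is why the index uses Math.floorMod rather than the % operator.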

Another approach is Contextual Categories. The web has a number of sources, both proprietary and free, that categorize specific web pages by their content. These categories serve as content-based groupings that can be used to reduce the dimensionality of the data. With category data, original feature space of URLs becomes a feature space of categories.

There are many other techniques for dimensionality reduction, including Singular Value Decomposition (SVD) and Principal Component Analysis (PCA), which have proved to be good alternatives for reducing the huge URL feature space.

Conclusion

This article gave a brief overview of the targeted advertising business, a multibillion-dollar industry that is growing dramatically. Most of the big players in the online advertising market work with real-time bidding (RTB) systems, which connect advertisers and publishers. An RTB exchange acts as an online auction, allowing advertisers to bid in real time on the opportunity to place online display ads for a particular user. Right now the industry's key metric for measuring the success of an ad campaign is click through rate (CTR); however, recent studies show that site visits are a better conversion predictor than CTR. At first sight, machine learning for targeted advertising seems trivial. But on closer inspection, one notices underlying difficulties, including rare conversions, lack of training data and a high-dimensional input feature space. However, a number of studies have identified efficient solutions to these difficulties that yield good models for predicting future conversion events.

References

1. S. Pandey, M. Aly, A. Bagherjeiran, A. Hatch, P. Ciccolo, A. Ratnaparkhi, and M. Zinkevich. Learning to target: What works for behavioral targeting.

2. B. Dalessandro, R. Hook, C. Perlich, F. Provost. Evaluating and Optimizing Online Advertising: Forget the click, but there are good proxies.

3. C. Perlich, B. Dalessandro, O. Stitelman, T. Raeder, F. Provost. Machine learning for targeted display advertising: Transfer learning in action.

1/06/2014

The Secret Ingredient of Business Development

Over the past 20 years in IT business ELEKS has developed a “secret” ingredient that is deeply rooted in its values and has helped greatly both the company and its customers. So let me share this secret ingredient with you.

We learned that it is simply not enough to set a goal and just go for it. In order to maximize success we needed a vehicle that would be more reliable and independent of certain risks that exist in the software development industry. We have been applying it to everything since: the creation of software, workflow processes within the company, and relationships with our customers. This key ingredient is an ecological systemic approach.

12/27/2013

BYOX, Security, UX, Responsibility

There are 2 sides to adopting mobility. First is desire for innovation, the opportunity to drive better business with new technologies and thus increase revenue. Second is the fear of changes, the threat of holes in new processes which could result in enormous losses. Today I'd like to talk about the balance between these two sides, the balance between usability and security.

My company handles extremely sensitive data. Its exposure may result in my company's bankruptcy. I control its storage by locating data centers on my own premises. I control its flow by using secure mailing services from providers I can trust. Recently I learned that this data is under a great threat, because employees use tools to keep it on their mobile devices. Fortunately I've overcome this challenge by introducing an MDM solution onto everyone's device. I've protected the data from any other usage with strict policies, which all of the employees are obliged to follow.

The Conflict

We see a typical clash of interests. An employee just looks for the easiest way to get his job done. The security officer wants to make sure it's done in a safe way. Both are right. So where is the solution?

Which one should change their attitude? The user, who has signed the security policy and will answer for violating it, or the security officer, who's responsible for any data leakage?

I believe from this point I'm switching from objective to subjective thinking.

The Irony

I'll rephrase the last question. If data gets leaked, who will suffer more? The employee, who gets fired, pays a fine and potentially goes to jail, or the Company, which will go through numerous courts and potentially cease to exist? Or, to put it another way: who's more aware of this risk? In whose interest is it to protect the data? Who should adapt to the situation? You see where I'm driving at.

The irony is that regardless of how hard we try, we can't force people to drop their habits, change their own ways of doing things and accept our rules. The best we can do is encourage them to switch to a new way, which would suit them even better. And here's why.

The Good, The Bad and The Lazy

Back in 2007, Apple did a wonderful thing. They made it so simple to use a mobile phone, and so functional at the same time! This has set a very high standard of expectations among users. As things got simpler people got lazier. And now an app can't be considered competitive unless its UX is intuitive. If people need to make more than a trivial effort to get what they want from an app, they simply quit and use another one. That's where we've brought ourselves, that's what consumerization of IT is.

The Clash

And now this big wave of consumerization is getting into the enterprise. The world where large systems with dozens of buttons on one screen and frankly complex UX have been standard for years gets hit by these small screens and highly trivial use cases. The employee and the simplicity of his actions start to be the priority. Ignoring this priority means that the employee will be much less productive, or worse, he'll find another way to complete his action, bypassing all our validation and security rules.

The Responsibility

But using fancy apps is not the same as using enterprise software. Work is not always fun and simple. And that's the part where users have to realize their responsibility as an employee. This is the difference between enterprise and consumer apps. Employees still have to use the app, even if they don't like it.

Their productivity is another question though.

The End

It's not about who's responsible for failure, it's about avoiding it. It's just that in this case, limitations are less effective than flexibility. It's possible to achieve same security goals without losing much productivity.

The Reality

I'd like to conclude with a real-life example of what happens when employees are forced to do a job they don't want to. I witnessed it 2 weeks ago, after I started writing this article. It was the middle of the Ukrainian revolution of 2013, November 11th, Kiev. People had been protesting for the 3rd week, and one night the government gave the order to stop the protest by "cleaning" out the protesters by force. But most of the policemen didn't feel like fighting their fellow citizens, who hadn't done anything wrong. They still couldn't refuse to follow their orders, otherwise they'd be arrested themselves. So what it ended up with was a very weak attack from the police, with policemen uncertainly pushing the people. This resulted in a strong victory for the protesters, who stood their ground and defended themselves, and thus in the total failure of the government's plans.

The Opportunity

(thoughts of a typical employee)

My day-to-day job requires a lot of document processing and working with my company's internal tools. It also requires travelling to other offices, so I spend about 20% of my work time out of the office. I often save copies of documents on my laptop and mobile devices to be able to work with them on the move. It helps me do my job faster. But recently our whole department had to enroll in a program which forbids any storage of work documents. From now on I take pictures of the most relevant documents. I realize that it's a security threat, but I'd rather get my job done than conform to overrated security policies.

The Threat

(thoughts of security officer)

The Conflict

We see a typical clash of interests. An employee just looks for the easiest way to get his job done. The security officer wants to make sure it's done in a safe way. Both are right. So where is the solution?

Which one should change his attitude? The user, who has signed the security policy and will answer for violating it, or the security officer, who's responsible for any data leakage?

I believe that from this point on I'm switching from objective to subjective thinking.

The Irony

I'll rephrase the last question. If data gets leaked, who will suffer more? The employee, who gets fired, pays a fine and potentially goes to jail, or the company, which will go through numerous court cases and potentially cease to exist? Or, to put it another way: who's more aware of this risk? In whose interest is it to protect the data? Who should adapt to the situation? You see what I'm driving at.

The irony is that no matter how hard we try, we can't force people to drop their habits, change their own ways of doing things and accept our rules. The best we can do is encourage them to switch to a new way which suits them even better. And here's why.

The Good, The Bad and The Lazy

Back in 2007, Apple did a wonderful thing. They made a mobile phone so simple to use, and so functional at the same time! This set a very high standard for user expectations. As things got simpler, people got lazier. And now an app can't be considered competitive unless its UX is intuitive. If people need to make more than a trivial effort to get what they want from an app, they simply quit and use another one. That's where we've brought ourselves; that's what consumerization of IT is.

The Clash

And now this big wave of consumerization is getting into the enterprise. A world where large systems with dozens of buttons on one screen and frankly complex UX have been the standard for years gets hit by these small screens and highly trivial use cases. The employee and the simplicity of his actions start to be the priority. Ignoring this priority means that the employee will be much less productive, or worse, he'll find another way to complete his task, bypassing all our validation and security rules.

(thanks for the pic, @daveslocombe)

The Responsibility

But using fancy apps is not the same as using enterprise software. Work is not always fun and simple. And that's the part where users have to realize their responsibility as employees. This is the difference between enterprise and consumer apps: employees still have to use the app, even if they don't like it.

Their productivity is another question though.

The End

It's not about who's responsible for failure, it's about avoiding it. It's just that in this case, limitations are less effective than flexibility. It's possible to achieve the same security goals without losing much productivity.

The Reality

I'd like to conclude with a real-life example of what happens when employees are forced to do a job they don't want to do. I witnessed it 2 weeks ago, after I started writing this article. It was the middle of the Ukrainian revolution of 2013, December 11th, Kiev. People had been protesting for the 3rd week, and one night the government gave the order to stop the protest by "cleaning out" the protesters by force. But most of the policemen didn't feel like fighting their fellow citizens, who had done nothing wrong. They still couldn't refuse to follow their orders, otherwise they'd be arrested themselves. So what it ended up with was a very weak attack from the police, with policemen hesitantly pushing the people. This resulted in a strong victory for the protesters, who stood their ground and defended themselves, and thus in a total failure of the government's plans.

12/18/2013

Solving the Santa Claus problem using OCaml

Santa’s problem

Not much time is left till Xmas. Have you ever wondered how Santa manages all his business with those elves and reindeer? And why?

Even if you don’t care, there are people who do. At least one. John Trono of St. Michael’s College in Vermont was so worried about how Santa can handle all that stuff that he decided to model Santa’s work and wrote a rather simplified scenario of Santa’s life:

“Santa Claus sleeps in his shop at the North Pole and can only be awakened by either (1) all nine reindeer being back from their vacation in the South Pacific, or (2) some of the elves having difficulty making toys; to allow Santa to get some sleep, the elves can only wake him when three of them have problems. When three elves are having their problems solved, any other elves wishing to visit Santa must wait for those elves to return. If Santa wakes up to find three elves waiting at his shop’s door, along with the last reindeer having come back from the tropics, Santa has decided that the elves can wait until after Christmas, because it is more important to get his sleigh ready. (It is assumed that the reindeer do not want to leave the tropics, and therefore they stay there until the last possible moment.) The last reindeer to arrive must get Santa while the others wait in a warming hut before being harnessed to the sleigh.”

Besides the given scenario, let’s add some specifications:

- After the ninth reindeer arrives, Santa must invoke prepare_sleigh, and then all nine reindeer must invoke get_hitched.

- After the third elf arrives, Santa must invoke help_elves. Concurrently, all three elves should invoke get_help.

- All three elves must invoke get_help before any additional elves enter.

Not very complicated, as you can see, at least until you try to implement it. To make the solution less boring, I’ve decided to implement it in OCaml rather than on an enterprise platform like .NET or Java. At the moment of writing this post, I haven’t managed to find an OCaml solution on the internet. OCaml is an ML-derived functional language with static typing, pattern matching and automatic garbage collection. It has a fairly big standard library and a nice native code compiler for a number of platforms. However, I’ve chosen it just to make solving Santa’s problem a bit more challenging and interesting; in other words, just for fun. I’ll try to comment the lines of code that look weird, so don’t panic.

Pseudo-code solution

First, let’s solve Santa’s problem using pseudo-code. We’ll use elf and reindeer counters protected by a mutex, a semaphore for Santa (he waits until either an elf or a reindeer signals him), a semaphore for the reindeer (they wait until Santa signals them to get hitched), a semaphore for the elves (they wait until Santa helps them) and a mutex to prevent additional elves from entering while three elves are being helped.

Santa’s code is the easiest one (it runs in an infinite loop):

    santa_sem.wait()
    mutex.wait()
    if reindeer == 9 {
        prepare_sleigh()
        reindeer_sem.signal(9)
        reindeer = 0    // reset, as the OCaml version does; otherwise the next wake-up by elves would look like a reindeer one
    } else if elves == 3 {
        help_elves()
        elf_sem.signal(3)
    }
    mutex.signal()

Santa checks two conditions and deals either with the reindeer or with the elves. If there are nine reindeer waiting, Santa prepares the sleigh and signals the reindeer semaphore nine times, allowing the reindeer to invoke get_hitched. Otherwise, if there are three elves waiting, Santa invokes help_elves.
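The priority rule in this check can be tried out without any threads. Below is a minimal sketch, not part of the original solution: the type action and the function santa_action are hypothetical names introduced only to show that reindeer win when both groups are ready.

```ocaml
(* Hypothetical model of Santa's wake-up decision; names are illustrative only. *)
type action = Prepare_sleigh | Help_elves | Keep_sleeping

(* Reindeer are checked first, so they win when both groups are ready. *)
let santa_action ~reindeer ~elves =
  if reindeer = 9 then Prepare_sleigh
  else if elves = 3 then Help_elves
  else Keep_sleeping

let describe = function
  | Prepare_sleigh -> "prepare_sleigh"
  | Help_elves -> "help_elves"
  | Keep_sleeping -> "keep sleeping"

let () =
  (* Both groups ready: the sleigh comes first. *)
  print_endline (describe (santa_action ~reindeer:9 ~elves:3));
  (* Only the elves are ready. *)
  print_endline (describe (santa_action ~reindeer:4 ~elves:3));
  (* Nobody is ready yet. *)
  print_endline (describe (santa_action ~reindeer:4 ~elves:1))
```

The order of the two branches is the whole point: swapping them would let three waiting elves delay Christmas.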

Code for reindeer is also not very complicated:

    mutex.wait()
    reindeer += 1
    if reindeer == 9 {
        santa_sem.signal()
    }
    mutex.signal()
    reindeer_sem.wait()
    get_hitched()

The ninth reindeer signals Santa and then joins the others waiting for reindeer_sem. When it’s signalled, they invoke get_hitched.

The code for elves is quite similar, but it uses another turnstile for three-elves-stuff:

    elf_mutex.wait()
    mutex.wait()
    elves += 1
    if elves == 3 {
        santa_sem.signal()
    } else {
        elf_mutex.signal()
    }
    mutex.signal()
    elf_sem.wait()
    get_help()
    mutex.wait()
    elves -= 1
    if elves == 0 {
        elf_mutex.signal()
    }
    mutex.signal()

The first two elves release elf_mutex at the same time they release the mutex, but the last elf holds elf_mutex, preventing other elves from entering until all three elves have invoked get_help. The last elf to leave releases elf_mutex, allowing the next batch of elves to enter.

The OCaml part

Now the time has come to have some fun with OCaml. The first thing to mention is that OCaml (4.01 at the time of writing) does not have any built-in semaphore class-or-something (it’s because of its rich standard library). But it’s not a big issue, since the Threads library has the Mutex and Condition modules, and we can use them to write our own semaphore class (yeah, OCaml is Objective Caml and it does have classes). To make the semaphore more or less serious, let’s write it in a separate module.

    module Semaphore = struct
      class semaphore initial_count initial_name =
        object (self)
          (* number of threads that may still pass wait without blocking *)
          val mutable count = initial_count
          (* kept only for logging while debugging *)
          val name = initial_name
          val sync = Mutex.create()
          val cond = Condition.create()

          method inc n = count <- count + n
          method dec n = count <- count - n

          (* add n permits and wake up to n waiting threads *)
          method signal ?(n=1) () =
            Mutex.lock sync;
            self#inc n;
            for i = 1 to n do
              Condition.signal cond
            done;
            Mutex.unlock sync

          (* block while no permits are left, then take one *)
          method wait =
            Mutex.lock sync;
            while count = 0 do
              Condition.wait cond sync
            done;
            self#dec 1;
            Mutex.unlock sync
        end
    end;;

My semaphore has an internal mutable field count (yeah, OCaml is not a pure functional language like Haskell), which acts as the “gate width”: the number of threads that may pass the semaphore without blocking. It also has an internal name (used for logging when I was hunting a deadlock in my code some time ago), one mutex and one condition variable. It has two primary methods, signal (with an optional parameter n) and wait, which are the usual increment/decrement operations of a semaphore: signal increases the internal counter by n and wakes up waiting threads, while wait blocks the calling thread as long as the counter is zero and then decrements it.
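To see the counter arithmetic in isolation, here is a small single-threaded model. It is only a sketch under a loud assumption: there is no Mutex or Condition in it, and the hypothetical try_wait returns false where the real wait would block. The names counter, make and try_wait are mine, not part of the semaphore class.

```ocaml
(* Single-threaded model of the semaphore counter: no Mutex, no Condition.
   try_wait is a hypothetical stand-in that reports whether wait would pass. *)
type counter = { mutable count : int }

let make initial = { count = initial }

(* signal adds n permits (default 1), like the optional ?n of the class. *)
let signal ?(n = 1) c = c.count <- c.count + n

(* A real wait would block while count = 0; this model just returns false. *)
let try_wait c =
  if c.count = 0 then false
  else begin
    c.count <- c.count - 1;
    true
  end

let () =
  let s = make 0 in
  (* Nothing has been signalled yet, so a waiter would block here. *)
  Printf.printf "before signal: %b\n" (try_wait s);
  (* Santa-style signal: open the gate for three waiters at once. *)
  signal ~n:3 s;
  for attempt = 1 to 4 do
    Printf.printf "attempt %d: %b\n" attempt (try_wait s)
  done
```

After signal ~n:3 the first three attempts pass and the fourth does not, which is exactly the behaviour Santa relies on when he lets three elves or nine reindeer through.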

If an OCaml program is split into modules, you can expect big fun trying to compile it. First, you have to generate an interface for the module. Second, you have to compile both the interface and the module itself:

    ocamlc -thread unix.cma threads.cma -i semaphore.ml > semaphore.mli
    ocamlc -thread unix.cma threads.cma -c semaphore.mli
    ocamlc -thread unix.cma threads.cma -c semaphore.ml

To enable multithreading support (in terms of POSIX-compatible threads) you have to compile your code with the -thread option and include the compiled unix and threads libraries.

Now let’s write the main program. Since we’re dealing with logging and multithreading, I’ve written a helper function which uses our Semaphore class to synchronize printing to stdout:

    let stdout_sem = new Semaphore.semaphore 1 "stdout_sem";;

    let puts s =
      stdout_sem#wait;
      Printf.printf "%s\n" s;
      flush stdout;
      stdout_sem#signal ();;

Next, I’m using a kind of transport structure for the Santa, reindeer and elf functions (shared between them and protected by a semaphore). This structure (a record, in OCaml terms) contains the counters and semaphores discussed earlier:

    type santa_counters = {
      mutable elves : int;
      mutable reindeer : int;
      santa_sem : Semaphore.semaphore;
      reindeer_sem : Semaphore.semaphore;
      elf_sem : Semaphore.semaphore;
      elf_mutex : Semaphore.semaphore;
      mutex : Semaphore.semaphore
    };;

and a simple initializer:

    let new_santa_counters () = {
      elves = 0;
      reindeer = 0;
      santa_sem = new Semaphore.semaphore 0 "santa_sem";
      reindeer_sem = new Semaphore.semaphore 0 "reindeer_sem";
      elf_sem = new Semaphore.semaphore 0 "elf_sem";
      elf_mutex = new Semaphore.semaphore 1 "elf_mutex";
      mutex = new Semaphore.semaphore 1 "mutex"
    };;

To make our example more realistic, I’ve implemented prepare_sleigh and the other functions using my helper for synchronized printing, so we can see what actually happens:

    let prepare_sleigh () = puts "Prepare sleigh";;
    let help_elves () = puts "Help Elves";;
    let get_hitched () = puts "Get Hitched";;
    let get_help () = puts "Get Help";;

You might think that the parentheses () at the end of each function are the usual call parentheses as in Java, C++ etc., but actually () is an argument of type unit that each function takes. Please refer to the tutorials for more details.
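A tiny experiment makes the role of the unit argument visible. This is an illustrative sketch only: calls, greeting_value and greeting_func are hypothetical names, and the counter exists just to show how many times each body is evaluated.

```ocaml
(* A counter makes the difference visible: how often does the body run? *)
let calls = ref 0

(* No argument: the right-hand side is evaluated once, at definition time. *)
let greeting_value = (incr calls; "Get Help")

(* Unit argument: the body runs again on every call greeting_func (). *)
let greeting_func () = (incr calls; "Get Help")

let () =
  ignore greeting_value;
  ignore greeting_value;
  Printf.printf "after two uses of the value: %d\n" !calls;
  ignore (greeting_func ());
  ignore (greeting_func ());
  Printf.printf "after two calls of the function: %d\n" !calls
```

Without the () parameter, prepare_sleigh and friends would print their message once, when the program starts, and never again.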

Let’s take a look at our pseudo-code solutions implemented in OCaml:

    let santa_role_func c =
      c.santa_sem#wait;
      c.mutex#wait;
      if c.reindeer = 9 then (
        prepare_sleigh ();
        c.reindeer_sem#signal ~n:9 ();
        c.reindeer <- 0;
      )
      else if c.elves = 3 then (
        help_elves ();
        c.elf_sem#signal ~n:3 ()
      );
      c.mutex#signal ();;

    let reindeer_role_func (c, i) =
      let s = Printf.sprintf "Starting reindeer (%d)" i in
      puts s;
      c.mutex#wait;
      c.reindeer <- c.reindeer + 1;
      if c.reindeer = 9 then c.santa_sem#signal ();
      c.mutex#signal ();
      c.reindeer_sem#wait;
      get_hitched ();;

    let elves_role_func (c, i) =
      let s = Printf.sprintf "Starting elf [%d]" i in
      puts s;
      c.elf_mutex#wait;
      c.mutex#wait;
      c.elves <- c.elves + 1;
      if c.elves = 3 then c.santa_sem#signal () else c.elf_mutex#signal ();
      c.mutex#signal ();
      c.elf_sem#wait;
      get_help ();
      c.mutex#wait;
      c.elves <- c.elves - 1;
      if c.elves = 0 then c.elf_mutex#signal ();
      c.mutex#signal ();;

You can notice that santa_role_func accepts one parameter c (our transport structure), while the other two accept a pair (c, i). Santa’s role function is called repeatedly from a loop, while the others run just once as thread bodies; since Thread.create passes exactly one argument to the function it starts, c and i are packed into a tuple. The second element of the pair is the index of the thread in which the function is running (for debug and visualization purposes).
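The tuple trick can be shown without the threads library. In the sketch below, run_once is a hypothetical stand-in that has the same argument shape as Thread.create (a function plus exactly one argument for it) but simply calls the function; role_tupled and role_curried are illustrative names as well.

```ocaml
(* Hypothetical stand-in with the same argument shape as Thread.create:
   it takes a function and exactly one argument for it. It runs the
   function immediately instead of starting a thread. *)
let run_once (f : 'a -> 'b) (x : 'a) : 'b = f x

(* Tuple style, as in the post: both values travel as one argument. *)
let role_tupled (name, i) = Printf.sprintf "Starting %s (%d)" name i

(* Curried style also fits once the first argument is partially applied. *)
let role_curried name i = Printf.sprintf "Starting %s (%d)" name i

let () =
  print_endline (run_once role_tupled ("reindeer", 4));
  print_endline (run_once (role_curried "reindeer") 4)
```

Both calls produce the same string, so the choice between a tuple and partial application is mostly a matter of taste.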

The last step (apart from compilation) is to make all this stuff work together:

    let c = new_santa_counters () in
    let santa_loop () =
      puts "Starting Santa loop";
      while true do
        santa_role_func c;
      done
    in
    let santa_array = [| Thread.create santa_loop () |]
    and reindeer_array = Array.init 9 (fun i -> Thread.create reindeer_role_func (c, i))
    and elf_array = Array.init 20 (fun i -> Thread.create elves_role_func (c, i)) in
    Array.iter Thread.join (Array.concat [santa_array; reindeer_array; elf_array]);;

The code above creates three arrays of threads: santa_array (which always contains just one element), reindeer_array (always contains 9 reindeer threads) and elf_array (which contains 20 (fairly chosen) elf threads). After all the threads are started, the main program joins them using some humble functional magic with Array.iter.
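Since each elf thread asks for help only once and Santa helps elves strictly in groups of three, the number 20 has a visible consequence. The sketch below is a plain list-based model, not the threaded program; batches is a hypothetical helper, and it assumes that elves are grouped simply in the order they arrive.

```ocaml
(* Hypothetical helper: split elf ids into full groups of [size] plus leftovers. *)
let batches size ids =
  let rec go full current n = function
    | [] -> (List.rev full, List.rev current)
    | id :: rest ->
      if n + 1 = size then go (List.rev (id :: current) :: full) [] 0 rest
      else go full (id :: current) (n + 1) rest
  in
  go [] [] 0 ids

let () =
  (* Elf ids 0..19, like the indexes passed to the elf threads. *)
  let elves = List.init 20 (fun i -> i) in
  let full, leftover = batches 3 elves in
  Printf.printf "full batches: %d, elves left waiting: %d\n"
    (List.length full) (List.length leftover)
```

If I read the threaded code correctly, this means six rounds of help_elves, with the last two elves left waiting for a third one that never comes.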

What happened at the North Pole

I’ve copied typical stdout of the santa_problem program below (along with the OCaml version, for clarity).

> ocaml -version

The OCaml toplevel, version 4.01.0

> ./build.sh

> ./santa_problem

Starting santa loopStarting reindeer (4)

Starting reindeer (5)

Starting reindeer (6)

Starting reindeer (3)

Starting reindeer (7)

Starting reindeer (8)

Starting elf [0]

Starting reindeer (2)

Starting elf [1]

Starting elf [2]

Starting elf [3]

Starting elf [4]

Starting reindeer (1)

Starting elf [5]

Starting elf [6]

Starting elf [7]

Starting elf [8]

Starting elf [9]

Starting elf [10]

Starting elf [11]

Starting elf [12]

Starting reindeer (0)

Starting elf [13]

Starting elf [14]

Starting elf [15]

Starting elf [19]

Prepare sleigh

Starting elf [16]

Starting elf [18]

Get Hitched

Get Hitched

Get Hitched

Get Hitched

Get Hitched

Get Hitched

Get Hitched

Get Hitched

Get Hitched

Starting elf [17]

Help Elves

Get Help

Get Help

Get Help

Help Elves

Get Help

Get Help

Get Help

Help Elves

Get Help

Get Help

Get Help

Help Elves

Get Help

Get Help

Get Help

Help Elves

Get Help

Get Help

Get Help

Help Elves

Get Help

Get Help

Get Help

……

Merry Xmas

Santa’s problem is one of the classical synchronization problems and is worth looking into. A lot of solutions exist nowadays. For example, there is an interesting approach that solves it in Haskell using Software Transactional Memory (STM). Despite the fact that OCaml does not provide us with features as cool as STM, we can see that building parallel programs in OCaml is easy fun! As far as I can see, the solution above is kind of the first pure OCaml solution of Santa’s problem.

You can download all code from the Santa's gist.
